The first thing that came to mind was using larger buffer sizes in file read and write to reduce overhead. I did a quick search on CodeProject and MSDN without finding anything. How did they achieve more than I was? I wiped the dust off of 45 years of software development and began a deeper consideration of system architecture and software processing.

After a lot of wrangling, they changed some settings and all of a sudden they were achieving 160GB/hour!

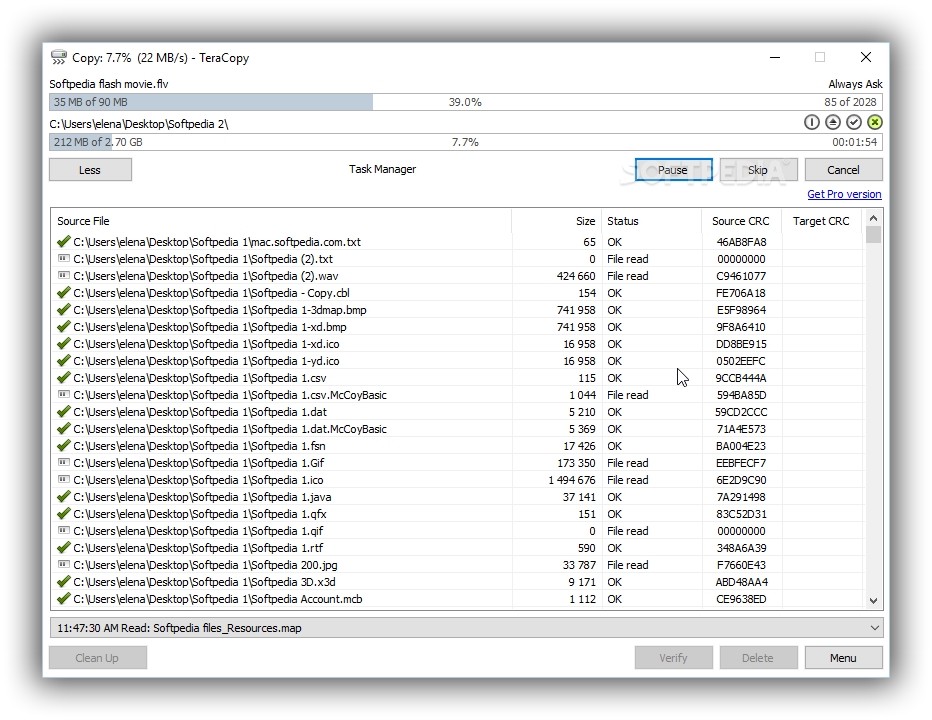

Then I discovered they were only achieving 5GB/hour writing back. I was only achieving 25GB/hour so I was beginning to get worried. An outside vendor provided a program which handled the processing and sent them back to the data store. It was a two step process where I sent the file to a server where they were processed. I thought 1000 hours! That would take 40 days! Well, it was a lot of data so I better get started. Then I had to do a mass conversion of a 50TB data store of 15,000 files. I wasn't actually waiting for these files while they were going through various stages of processing so I didn't really care. I was wrong! I typically achieved transfer speeds of about 60GB/hour over a Gigabit network connection depending on other traffic and disk activity. NET had already written highly efficient code for me. I used File.Copy or File.Move making the typical assumption that Microsoft. I often need to transfer files about 5GB and larger from one location to another. I develop and maintain a Media Automation system for a satellite broadcaster.
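The larger-buffer idea mentioned above can be illustrated with a minimal sketch. This is not the code the article arrives at; the class name `LargeBufferCopy`, the method `Copy`, and the 1 MiB default buffer size are all assumptions chosen for illustration, and the right buffer size would have to be measured on the actual disks and network.

```csharp
using System;
using System.IO;

// Hypothetical helper for illustration only: copies a file through one
// large in-memory buffer instead of calling File.Copy.
public static class LargeBufferCopy
{
    // Assumed starting point (1 MiB); tune by measurement.
    public const int DefaultBufferSize = 1024 * 1024;

    // Copies sourcePath to destinationPath and returns the bytes copied.
    public static long Copy(string sourcePath, string destinationPath,
                            int bufferSize = DefaultBufferSize)
    {
        if (bufferSize <= 0)
            throw new ArgumentOutOfRangeException(nameof(bufferSize));

        var buffer = new byte[bufferSize];
        long totalBytes = 0;

        // SequentialScan hints to the OS that the file is read front to back.
        using (var source = new FileStream(sourcePath, FileMode.Open,
                   FileAccess.Read, FileShare.Read, bufferSize,
                   FileOptions.SequentialScan))
        using (var destination = new FileStream(destinationPath, FileMode.Create,
                   FileAccess.Write, FileShare.None, bufferSize))
        {
            int bytesRead;
            // Fewer, larger reads and writes mean fewer calls per gigabyte.
            while ((bytesRead = source.Read(buffer, 0, buffer.Length)) > 0)
            {
                destination.Write(buffer, 0, bytesRead);
                totalBytes += bytesRead;
            }
        }

        return totalBytes;
    }
}
```

A call such as `LargeBufferCopy.Copy(sourcePath, destinationPath, 4 * 1024 * 1024)` would try a 4 MiB buffer. Timing a few sizes against a representative file is the only reliable way to tell whether the larger buffer actually helps in a given environment.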
